I. Background

Apple introduced multi-camera capture in iOS 13; it is supported on iPhone XS and later devices.

https://developer.apple.com/videos/play/wwdc2019/225

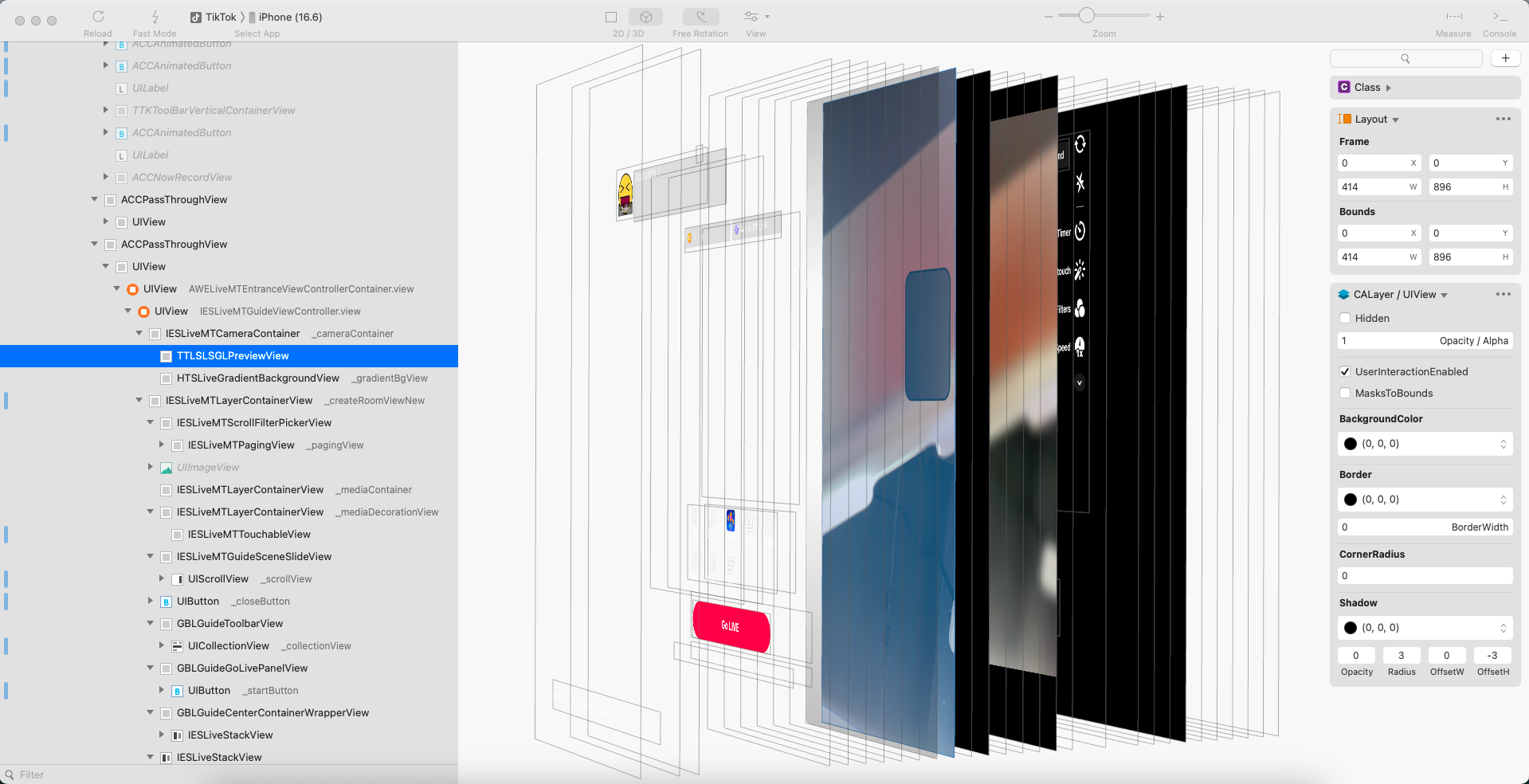

II. TikTok research

1. Camera

Opening the camera, or turning dual camera off

<<<< FigCaptureSession >>>> captureSession_commitInflightConfiguration: <0xe9aa2d480[1156][com.zhiliaoapp.musically]> committing configuration FigCaptureSessionConfiguration 0xe9ab39c10: ID 30, AVCaptureSessionPreset1280x720 multiCam: 0, appAudio: 1, autoConfig: 1, runWhileMultitasking: 0

VC 0xe9ab28390: <SRC:Wide back 420f/1280x720, 25-25(max:30), Z:1.00, ICM:0, (FD E:0 B:0 S:0), HR:1, FaceDrivenAEAFMode:3, FaceDrivenAEAFEnabledByDefault:1> -> <SINK 0xe9ab2c430:Iris movies:0, suspended:0, preserveSuspended:0, movieDur:nans, trim:0, 0fps, preparedID:12, (QHR ON) maxQuality:1, (maxPhotoDims 4224x2376)>, /0x0, E:1, VIS:0, M:0, O:Unspecified, DOC:0, RBC:0, CIM:0

VC 0xe9ab2c4f0: <SRC:Wide back 420f/1280x720, 25-25(max:30), Z:1.00, ICM:0, (FD E:0 B:0 S:0), HR:1, FaceDrivenAEAFMode:3, FaceDrivenAEAFEnabledByDefault:1> -> <SINK 0xe9ab7a070:VideoData discards:1, preview:0, stability:0>, 420f/720x1280, E:1, VIS:1, M:0, O:Portrait, DOC:0, RBC:12, CIM:0

The first VC corresponds to AVCapturePhotoOutput.

Turning dual camera on

<<<< FigCaptureSession >>>> captureSession_commitInflightConfiguration: <0xe9da26050[1156][com.zhiliaoapp.musically]> committing configuration FigCaptureSessionConfiguration 0xe9ab82cf0: ID 24, AVCaptureSessionPresetInputPriority multiCam: 1, appAudio: 1, autoConfig: 1, runWhileMultitasking: 0

VC 0xe9ab831a0: <SRC:Wide back 420f/1280x720, 25-25(max:60), Z:1.00, ICM:0, (FD E:0 B:0 S:0), FaceDrivenAEAFMode:3, FaceDrivenAEAFEnabledByDefault:1> -> <SINK 0xe9ab83250:VideoData discards:1, preview:0, stability:0>, 420f/720x1280, E:1, VIS:1, M:0, O:Portrait, DOC:0, RBC:12, CIM:0

VC 0xe9ab832c0: <SRC:Wide back 420f/1280x720, 25-25(max:60), Z:1.00, ICM:0, (FD E:0 B:0 S:0), FaceDrivenAEAFMode:3, FaceDrivenAEAFEnabledByDefault:1> -> <SINK 0xe9abab6d0:Iris movies:0, suspended:0, preserveSuspended:0, movieDur:nans, trim:0, 0fps, preparedID:8, (QHR ON) maxQuality:1, (maxPhotoDims 2112x1188)>, /0x0, E:1, VIS:0, M:0, O:Unspecified, DOC:0, RBC:0, CIM:0

VC 0xe9abab7e0: <SRC:Wide front 420f/1280x720, 25-25(max:60), Z:1.00, ICM:0, (FD E:0 B:0 S:0), FaceDriven

2. Compositing

The two streams are composited before the local preview is rendered.

III. Camera module

1. Relevant APIs

- AVCaptureMultiCamSession
  - isMultiCamSupported
  - hardwareCost
  - systemPressureCost
  - addInputWithNoConnections
  - addOutputWithNoConnections

- AVCaptureDeviceDiscoverySession (AVCaptureDevice.DiscoverySession in Swift)
  - supportedMultiCamDeviceSets

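2. Error handling

- The hardware may not support multi-camera capture;

- When hardwareCost exceeds 1.0, an AVCaptureSessionRuntimeErrorNotification is posted when the camera starts;

- When systemPressureCost exceeds 1.0, an AVCaptureSessionWasInterruptedNotification is posted;

Before enabling dual camera (openCamera), check compatibility and hardwareCost. If either check fails, return failure and notify the client so that it can show an appropriate prompt.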
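A minimal sketch of the pre-open check described above, written in C++ to match the module code. The names DualOpenResult and checkDualCameraPreconditions are hypothetical. The two inputs are assumed to be read on the platform side: multiCamSupported from AVCaptureMultiCamSession's isMultiCamSupported, and hardwareCost from the session after its inputs, outputs, and formats have been configured.

```cpp
#include <cassert>

// Hypothetical result type for the dual-camera pre-open check.
enum class DualOpenResult {
    Ok,
    MultiCamUnsupported,   // device cannot run a multi-cam session at all
    HardwareCostTooHigh    // configured session exceeds the hardware budget
};

// Decide whether openCamera may proceed in dual-camera mode.
// multiCamSupported: assumed to mirror AVCaptureMultiCamSession.isMultiCamSupported.
// hardwareCost:      assumed to mirror the configured session's hardwareCost.
inline DualOpenResult checkDualCameraPreconditions(bool multiCamSupported,
                                                   float hardwareCost) {
    assert(hardwareCost >= 0.0f);
    if (!multiCamSupported) {
        return DualOpenResult::MultiCamUnsupported;
    }
    // A cost above 1.0 means the session cannot run.
    if (hardwareCost > 1.0f) {
        return DualOpenResult::HardwareCostTooHigh;
    }
    return DualOpenResult::Ok;
}
```

Returning a distinct reason for each failure lets the client choose between a "device not supported" prompt and a "configuration too heavy" prompt.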

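Retry strategy when a systemPressureCost interruption arrives during a live broadcast: lower the capture resolution -> lower the frame rate -> switch to single camera.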
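The retry order could be tracked with a small state machine, sketched below. PressureFallback and FallbackAction are hypothetical names. The sketch assumes one step is taken per interruption and that the ladder is reset when a new broadcast starts; how each action is applied to the capture session is outside its scope.

```cpp
// Hypothetical actions, in the order given by the retry strategy.
enum class FallbackAction {
    LowerResolution,
    LowerFrameRate,
    SwitchToSingleCamera,
    None  // ladder exhausted; nothing further to try
};

// Tracks how far down the fallback ladder the current broadcast has gone.
class PressureFallback {
public:
    // Call once per system-pressure interruption; returns the next action
    // to attempt and advances to the following rung.
    FallbackAction onSystemPressureInterruption() {
        switch (stage_) {
            case 0: stage_ = 1; return FallbackAction::LowerResolution;
            case 1: stage_ = 2; return FallbackAction::LowerFrameRate;
            case 2: stage_ = 3; return FallbackAction::SwitchToSingleCamera;
            default: return FallbackAction::None;
        }
    }

    // Call when a new broadcast starts (assumption of this sketch).
    void reset() { stage_ = 0; }

private:
    int stage_ = 0;
};
```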

3. Camera configuration

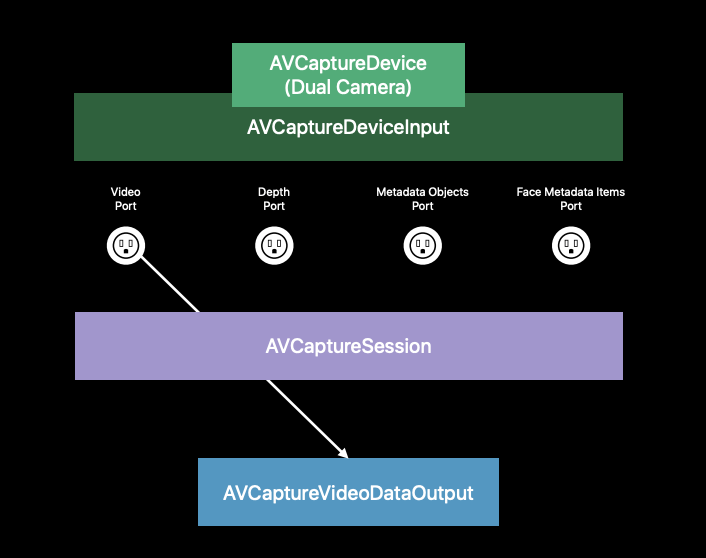

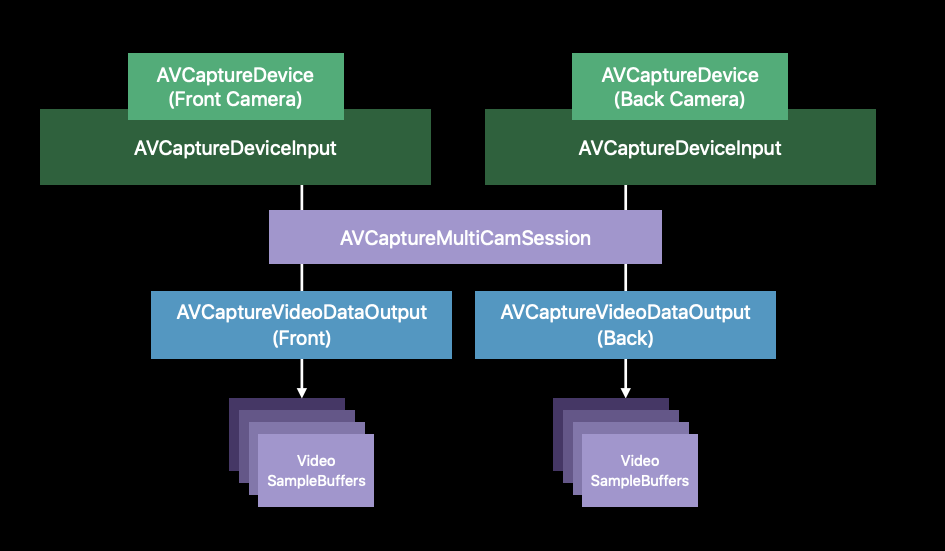

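An AVCaptureDeviceInput may expose multiple ports. An AVCaptureConnection is what links a given port to an AVCaptureVideoDataOutput.

In earlier usage, when inputs and outputs are added to an AVCaptureSession with addInput and addOutput, the system adds the corresponding connections implicitly.

With AVCaptureMultiCamSession, avoid implicitly added connections (per the WWDC 2019 session) and add the inputs and outputs with addInputWithNoConnections and addOutputWithNoConnections instead.

Create the AVCaptureConnection manually from the relevant port to the output, then add the connection to the session.

Create a separate AVCaptureDeviceInput, AVCaptureVideoDataOutput, and AVCaptureConnection for each of the front and back cameras.

4. Capture resolution

Following TikTok, initialize the primary and secondary cameras at the same resolution, so that swapping the front and back cameras does not require restarting the camera.

5. Camera operations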

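```cpp
enum class CaptureSceneType : uint64_t
{
    ...
    kSceneVideo_Dual = 1 << 12,                                   //!< Dual-camera video; the primary camera defaults to the back camera
    kSceneVideo_Dual_Front = SCENE_SUBTYPE(kSceneVideo_Dual, 1),  //!< Dual-camera video; the primary camera is the front camera
    kSceneVideo_Dual_Rear = SCENE_SUBTYPE(kSceneVideo_Dual, 2),   //!< Dual-camera video; the primary camera is the back camera
};
```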

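Add two new classes, HEMultiCameraIOS and HEMultiCameraImpl, to handle camera operations.

Add the dual-camera cam_v2::CaptureSceneType values; HECameraSystemInner::_handleOpen then creates the corresponding HEMultiCameraImpl through HECameraDecision::createCameraByType.

6. Camera switching

Switching between single and dual camera follows the same approach as the ARKit switch: first set the corresponding cam_v2::CaptureSceneType, then call openCamera again.

Switching between single and dual camera requires switching sessions, so the camera restarts and the picture brightness changes visibly. This has been communicated to the product team.

If dual camera only needs to be turned off temporarily, use: frontCameraInputVideoPort.enabled = false

7. Frame callbacks

The camera that needs effects applied is defined as the primary camera.

In the demo, the primary and secondary cameras deliver their video frames in separate, asynchronous callbacks.

The primary camera's callback drives delivery of video frames to the pre-processing pipeline. If the secondary camera has no new data when the primary callback fires, the previous secondary frame is reused.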
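The pairing rule above can be sketched as follows. CameraFrame, PairedFrame, and FramePairer are hypothetical stand-ins (the real pipeline would carry pixel buffers in HEFrame); the sketch assumes the two callbacks may arrive on different queues, so the cached secondary frame is guarded by a mutex.

```cpp
#include <memory>
#include <mutex>
#include <utility>

// Hypothetical stand-in for a captured frame.
struct CameraFrame {
    long long timestampUs;
};

// What gets handed to the pre-processing pipeline.
struct PairedFrame {
    std::shared_ptr<const CameraFrame> primary;
    std::shared_ptr<const CameraFrame> secondary;  // null until the first secondary frame arrives
};

class FramePairer {
public:
    // Secondary camera callback: only cache the latest frame, never emit.
    void onSecondaryFrame(std::shared_ptr<const CameraFrame> frame) {
        std::lock_guard<std::mutex> lock(mutex_);
        latestSecondary_ = std::move(frame);
    }

    // Primary camera callback: emit a pair using whatever secondary frame
    // is cached, which repeats the previous one if no new frame has arrived.
    PairedFrame onPrimaryFrame(std::shared_ptr<const CameraFrame> frame) {
        std::lock_guard<std::mutex> lock(mutex_);
        return PairedFrame{std::move(frame), latestSecondary_};
    }

private:
    std::mutex mutex_;
    std::shared_ptr<const CameraFrame> latestSecondary_;
};
```

Because only the primary callback emits, the output frame rate follows the primary camera, and a slow secondary camera shows up as repeated inset frames rather than as stalls.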

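8. Swapping the primary and secondary cameras

The pre-processing pipeline performs full pre-processing on HEFrame, and only format conversion and compositing on HEFrame.secondaryFrame.

When swapping the primary and secondary cameras, the design depends on which camera the effects are pinned to:

1. Effects pinned to the front camera

The front camera's image is always assigned to HEFrame, and the back camera's image is always assigned to HEFrame.secondaryFrame.

To swap the primary and secondary cameras, only the layer order of the compositing Entity needs to change.

2. Effects pinned to the primary camera

The primary camera's image is always assigned to HEFrame, and the secondary camera's image is always assigned to HEFrame.secondaryFrame.

To swap the primary and secondary cameras, exchange which camera is assigned to which slot.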

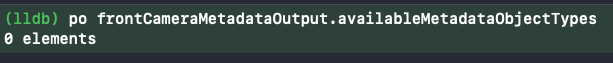
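The two options in section 8 can be contrasted with a small sketch. Camera, EffectPolicy, SlotAssignment, and assignSlots are hypothetical names, and the sketch assumes that the primary camera is the one drawn full-screen while the secondary camera is drawn as the inset.

```cpp
enum class Camera { Front, Back };

// Which camera the effects follow (the two options in section 8).
enum class EffectPolicy { PinToFrontCamera, PinToPrimaryCamera };

struct SlotAssignment {
    Camera frame;            // feeds HEFrame (full pre-processing, effects)
    Camera secondaryFrame;   // feeds HEFrame.secondaryFrame (conversion + compositing only)
    bool frameIsFullScreen;  // true: HEFrame is the full-screen layer; false: it is the inset
};

inline Camera otherCamera(Camera c) {
    return c == Camera::Front ? Camera::Back : Camera::Front;
}

// Compute slot assignment and layer order for a given primary camera.
inline SlotAssignment assignSlots(EffectPolicy policy, Camera primary) {
    if (policy == EffectPolicy::PinToFrontCamera) {
        // Slots never change; a swap only flips the layer order.
        return {Camera::Front, Camera::Back, primary == Camera::Front};
    }
    // Slots follow the primary camera; the layer order never changes.
    return {primary, otherCamera(primary), true};
}
```

Under the first policy a swap touches only the compositing stage, while under the second it touches only the assignment of camera outputs to frame slots.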

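9. Metering and focus

So far it appears that face information is not available in AVCaptureMultiCamSession, so face-driven metering and focus may not be achievable this way.

Per initial confirmation with the product team, EV and zoom adjustments must be available for the main picture.

10. Compositing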

```metal
#include <metal_stdlib>
using namespace metal;

struct MixerParameters
{
    float2 pipPosition;
    float2 pipSize;
    float2 pipScaleSize;
    float2 pipOffset;
    float cornerRadius;
    float blurSize;
};

constant sampler kBilinearSampler(filter::linear, coord::pixel, address::clamp_to_edge);

float4 blur(texture2d<float, access::sample> pipInput, float2 uv, float blurSize) {
    float4 color = float4(0.0);
    color += pipInput.sample(kBilinearSampler, uv + float2(-blurSize, -blurSize)) * 0.0625;
    color += pipInput.sample(kBilinearSampler, uv + float2( blurSize, -blurSize)) * 0.0625;
    color += pipInput.sample(kBilinearSampler, uv + float2(-blurSize,  blurSize)) * 0.0625;
    color += pipInput.sample(kBilinearSampler, uv + float2( blurSize,  blurSize)) * 0.0625;
    color += pipInput.sample(kBilinearSampler, uv + float2(0.0, -blurSize)) * 0.125;
    color += pipInput.sample(kBilinearSampler, uv + float2(0.0,  blurSize)) * 0.125;
    color += pipInput.sample(kBilinearSampler, uv + float2(-blurSize, 0.0)) * 0.125;
    color += pipInput.sample(kBilinearSampler, uv + float2( blurSize, 0.0)) * 0.125;
    color += pipInput.sample(kBilinearSampler, uv) * 0.25;
    return color;
}

// Compute kernel
kernel void reporterMixer(texture2d<float, access::read>   fullScreenInput [[texture(0)]],
                          texture2d<float, access::sample> pipInput        [[texture(1)]],
                          texture2d<float, access::write>  outputTexture   [[texture(2)]],
                          const device MixerParameters&    mixerParameters [[buffer(0)]],
                          uint2 gid [[thread_position_in_grid]])
{
    uint2 pipPosition = uint2(mixerParameters.pipPosition);
    uint2 pipSize = uint2(mixerParameters.pipSize);
    uint2 pipScaleSize = uint2(mixerParameters.pipScaleSize);
    uint2 pipOffset = uint2(mixerParameters.pipOffset);
    float cornerRadius = mixerParameters.cornerRadius;
    float blurSize = mixerParameters.blurSize;

    float4 output = fullScreenInput.read(gid);

    bool withinPipBounds = gid.x >= pipPosition.x &&
                           gid.y >= pipPosition.y &&
                           gid.x < pipPosition.x + pipSize.x &&
                           gid.y < pipPosition.y + pipSize.y;

    if (withinPipBounds) {
        float2 center = float2(pipSize) * 0.5;
        uint2 localPos = gid - pipPosition;
        float2 pipCoord = float2(localPos) - center;
        float2 edgeDistVec = abs(pipCoord) - (center - float2(cornerRadius));
        float2 edgeDist = max(edgeDistVec, float2(0.0));
        float edgeDistance = length(edgeDist);
        float feather = 1.0;
        float alpha = 1.0 - smoothstep(cornerRadius - feather, cornerRadius + feather, edgeDistance);

        if (alpha > 0.0) {
            float2 pipSamplingCoord = float2(gid - pipPosition + pipOffset) * float2(pipInput.get_width(), pipInput.get_height()) / float2(pipScaleSize);
            float4 pipColor = blur(pipInput, pipSamplingCoord, blurSize);
            output = mix(output, pipColor, alpha);
        }
    }

    outputTexture.write(output, gid);
}
```
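To sanity-check the rounded-corner mask in the kernel, the same alpha computation can be reproduced on the CPU. This is only a sketch: smoothstepf and pipAlpha are hypothetical helpers that mirror the shader's arithmetic, with feather defaulting to 1.0 as in the kernel, and coordinates given relative to the picture-in-picture origin.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hermite smoothstep, following the definition of the Metal built-in.
inline float smoothstepf(float edge0, float edge1, float x) {
    float t = std::min(std::max((x - edge0) / (edge1 - edge0), 0.0f), 1.0f);
    return t * t * (3.0f - 2.0f * t);
}

// Coverage (0..1) of the rounded rectangle at a PiP-local pixel position.
// Mirrors the edgeDistVec / edgeDistance / alpha lines of reporterMixer.
inline float pipAlpha(float localX, float localY,
                      float width, float height,
                      float cornerRadius, float feather = 1.0f) {
    assert(feather > 0.0f);
    const float cx = width * 0.5f;
    const float cy = height * 0.5f;
    // Distance outside the inner rectangle that remains after shrinking
    // each side by cornerRadius, clamped to zero inside it.
    const float dx = std::max(std::fabs(localX - cx) - (cx - cornerRadius), 0.0f);
    const float dy = std::max(std::fabs(localY - cy) - (cy - cornerRadius), 0.0f);
    const float dist = std::sqrt(dx * dx + dy * dy);
    return 1.0f - smoothstepf(cornerRadius - feather, cornerRadius + feather, dist);
}
```

For a 100x100 inset with cornerRadius 10, the center is fully opaque, the extreme corner is fully transparent, and the midpoint of an edge sits at half coverage. One consequence of this formula is that a cornerRadius smaller than feather leaves even the interior partially transparent (for cornerRadius 0 the interior alpha is 0.5), so callers would need to keep cornerRadius at or above feather or special-case the square-corner configuration.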